In the UK, the phrase “mind the gap” is a warning to watch out for the space between the train and the platform. The automated warning is heard thousands of times each day at every stop in the Underground. More generally, the phrase means to look out for something, or to explore what is missing or not there.

Self-knowledge, Restrictions, and Latency

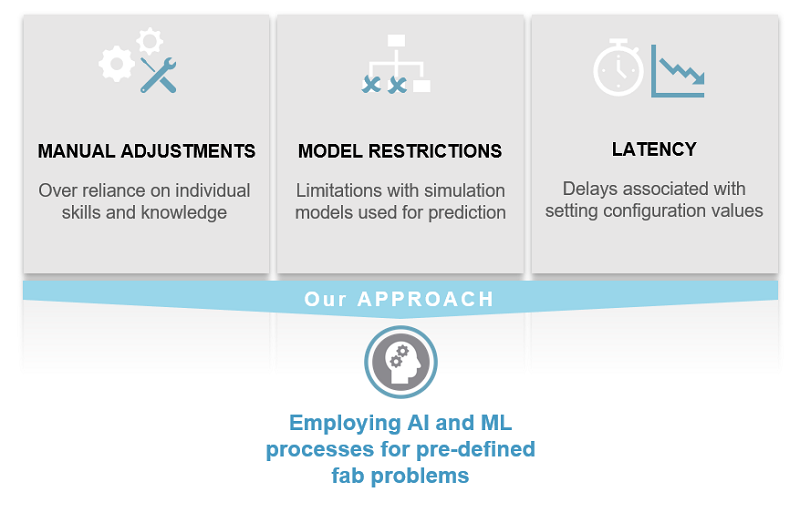

In the current productivity system and control environment of fabs, three key limitations exist that prevent manufacturers from moving on to smart manufacturing (see Figure 1):

- Manual adjustments. For scheduling and dispatching rules that span multiple areas, manufacturers rely heavily on their existing skills and knowledge, an approach that often falls short and is time consuming.

- Model restrictions. From the perspective of model run time and accuracy, clear limits exist with the current simulation models being used for predicting dynamics in the factory.

- Latency. For scheduling, dispatching, and equipment and process health controls, delays still occur in setting configuration values because of limited integration and limited prediction speed and accuracy.

A Smarter Way

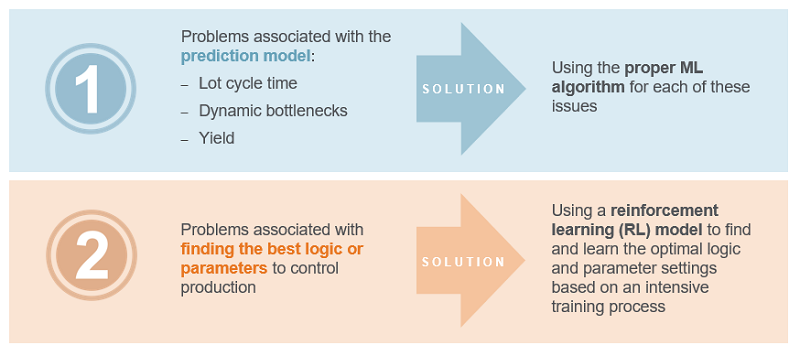

- The first type of problem relates to prediction models, that is, problems such as lot cycle time prediction, dynamic bottleneck prediction, and yield prediction. Choosing an appropriate ML algorithm for each of these problems offers a good solution.

- The second relates to finding the best logic or parameter values to control the production flow or equipment operations optimally, taking both real-time and future status into account. A reinforcement learning (RL) model could be a good way to find and learn the optimal logic and parameter settings through an intensive training process.
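The parameter-search idea in the second point can be illustrated with a minimal sketch. It uses an epsilon-greedy bandit, which is the simplest form of RL and not necessarily the algorithm the platform uses. The candidate values, the `simulate_mean_cycle_time` function, and its cost curve are all hypothetical stand-ins for a real fab simulation.

```python
import random

# Hypothetical candidate values for a dispatching parameter.
CANDIDATES = [0.5, 1.0, 2.0, 4.0]

def simulate_mean_cycle_time(factor, rng):
    """Toy stand-in for one simulation run; returns mean cycle time in hours."""
    noise = rng.uniform(-0.5, 0.5)  # bounded run-to-run variation
    return 20.0 + 4.0 * (factor - 2.0) ** 2 + noise

def tune_parameter(candidates, episodes=200, epsilon=0.1, seed=0):
    """Epsilon-greedy search for the candidate with the lowest cycle time."""
    rng = random.Random(seed)
    counts = [0] * len(candidates)
    mean_reward = [0.0] * len(candidates)
    for episode in range(episodes):
        if episode < len(candidates):
            arm = episode  # try every candidate once first
        elif rng.random() < epsilon:
            arm = rng.randrange(len(candidates))  # explore
        else:
            arm = max(range(len(candidates)), key=lambda i: mean_reward[i])  # exploit
        reward = -simulate_mean_cycle_time(candidates[arm], rng)  # shorter is better
        counts[arm] += 1
        # Incremental update of the running mean reward for this candidate.
        mean_reward[arm] += (reward - mean_reward[arm]) / counts[arm]
    best = max(range(len(candidates)), key=lambda i: mean_reward[i])
    return candidates[best], counts

best_factor, pull_counts = tune_parameter(CANDIDATES)
```

In practice each simulation run is expensive, so the number of episodes and the exploration rate would need to be chosen with the simulator's run time in mind.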

Minding the Gap

- Predefined feature factors for solving common productivity problems. Feature factors are statistical values, such as the mean cycle time at a step or the average number of lots at a step. Each feature factor represents a certain feature of a lot, a piece of equipment, or a step. With the AI/ML platform, manufacturers can reuse or modify APF Formatter reports that were created specifically to calculate the predefined feature factors. By using APF Formatter, manufacturers can easily manage feature factors and feature calculations.

- Ready-to-use ML models for solving common factory productivity and supply chain problems. These models serve as “baseline” solutions that you can freely modify and reuse as needed. You can build and deploy ML models by using the current APF platform in all stages of the ML model development lifecycle—from data preparation to deployment and monitoring. If you are familiar with the current APF platform, you can build your own ML model more easily.

- Ready-to-use algorithms for automatically setting scheduling and dispatching parameters. These algorithms are RL model-based and built around simulation-based optimization.

- Pre-built solution UIs for evaluation and monitoring. These UIs expedite the overall process of building and deploying new ML models.
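As an illustration of the feature factors mentioned in the first item above, the sketch below computes the mean cycle time at a step and the time-averaged number of lots at a step. It is plain Python and does not use APF Formatter; the record layout, step names, and function names are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical lot-move history: (lot_id, step, arrive_hour, depart_hour).
moves = [
    ("LOT1", "ETCH", 0.0, 2.0),
    ("LOT2", "ETCH", 1.0, 5.0),
    ("LOT1", "LITHO", 2.0, 3.0),
    ("LOT2", "LITHO", 5.0, 7.0),
]

def mean_cycle_time_by_step(moves):
    """Mean time in hours that a lot spends at each step."""
    durations = defaultdict(list)
    for _lot, step, arrive, depart in moves:
        durations[step].append(depart - arrive)
    return {step: mean(values) for step, values in durations.items()}

def average_lots_by_step(moves, window_start, window_end):
    """Time-averaged number of lots at each step over an observation window."""
    window = window_end - window_start
    lot_hours = defaultdict(float)
    for _lot, step, arrive, depart in moves:
        # Only count the part of the visit that falls inside the window.
        overlap = min(depart, window_end) - max(arrive, window_start)
        if overlap > 0:
            lot_hours[step] += overlap
    return {step: hours / window for step, hours in lot_hours.items()}

features = {
    "mean_cycle_time": mean_cycle_time_by_step(moves),
    "avg_lots": average_lots_by_step(moves, 0.0, 8.0),
}
```

Each entry in `features` is keyed by step, so the same values can be joined onto lot or equipment records when building a training set.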

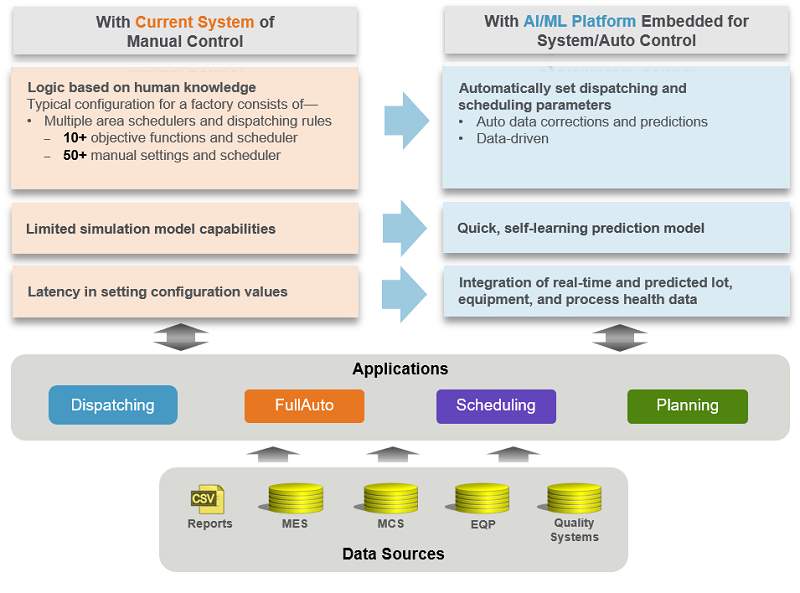

- The platform provides a data-driven approach with ML/RL algorithms, so you can automatically configure or adjust dispatching and scheduling parameters—eliminating the need for manual adjustments. Example dispatching and scheduling parameters include Hot_lot_factor, Prefer_tool_factor, and Step_move_target_factor.

- The platform also provides an ML prediction model capable of quick runs, so the scheduler and dispatcher can use the results in real time, which improves accuracy. The model also retrains automatically according to time-based or condition-based patterns, giving you better visibility and a quicker response to changing needs.

- With the improved prediction model, you can integrate real-time and predicted data more effectively, eliminating the latency associated with controlling key configurable parameters.

- The APF Formatter has a Python block that can run an ML model written in Python, so if you already use the APF platform to develop scheduling and dispatching rules, deploying ML models is almost the same as your current rule deployment process. This is a major benefit of the Applied AI/ML platform for current APF users, because they can develop and deploy AI/ML models without changing their current development and deployment environment.

- Finally, after deploying a model to production, you must monitor model performance and periodically retrain the model based on performance. In the Applied AI/ML platform, Activity Manager automatically manages this workflow process.
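The monitoring and retraining step in the last item can be sketched as a small check that combines a time-based trigger with a condition-based one. This is not how Activity Manager is implemented; the `RetrainMonitor` class, the rolling mean absolute error metric, and every threshold below are hypothetical.

```python
from collections import deque

class RetrainMonitor:
    """Tracks recent prediction errors and decides when to retrain."""

    def __init__(self, window=50, mae_threshold=2.0, max_age_hours=168.0):
        self.errors = deque(maxlen=window)  # keeps only the latest errors
        self.mae_threshold = mae_threshold
        self.max_age_hours = max_age_hours

    def record(self, predicted, actual):
        """Store the absolute error of one prediction."""
        self.errors.append(abs(predicted - actual))

    def rolling_mae(self):
        """Mean absolute error over the current window."""
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def should_retrain(self, model_age_hours):
        """Return the trigger name, or None if no retraining is needed."""
        if model_age_hours >= self.max_age_hours:
            return "time"  # time-based trigger
        window_full = len(self.errors) == self.errors.maxlen
        if window_full and self.rolling_mae() > self.mae_threshold:
            return "condition"  # condition-based trigger
        return None

monitor = RetrainMonitor(window=3, mae_threshold=1.0, max_age_hours=24.0)
for predicted, actual in [(10.0, 10.5), (10.0, 9.5), (10.0, 10.0)]:
    monitor.record(predicted, actual)
healthy = monitor.should_retrain(model_age_hours=1.0)

for predicted, actual in [(10.0, 15.0), (10.0, 14.0), (10.0, 16.0)]:
    monitor.record(predicted, actual)
degraded = monitor.should_retrain(model_age_hours=1.0)
expired = monitor.should_retrain(model_age_hours=24.0)
```

Waiting for a full window before applying the error threshold avoids retraining on a handful of early, noisy predictions.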

Predicting Lot Cycle Time Using Smarter AI/ML Controls

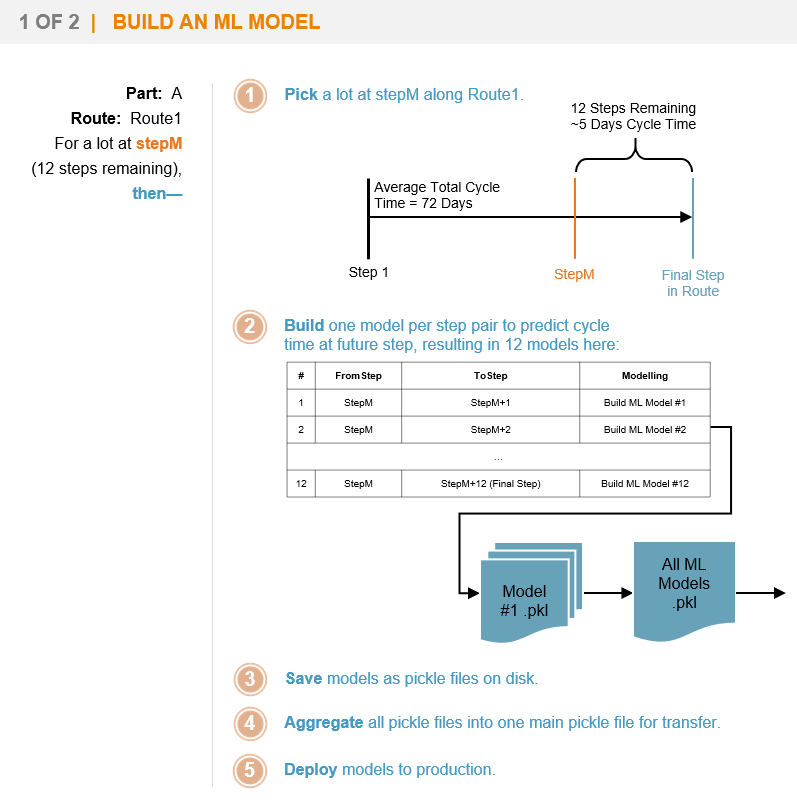

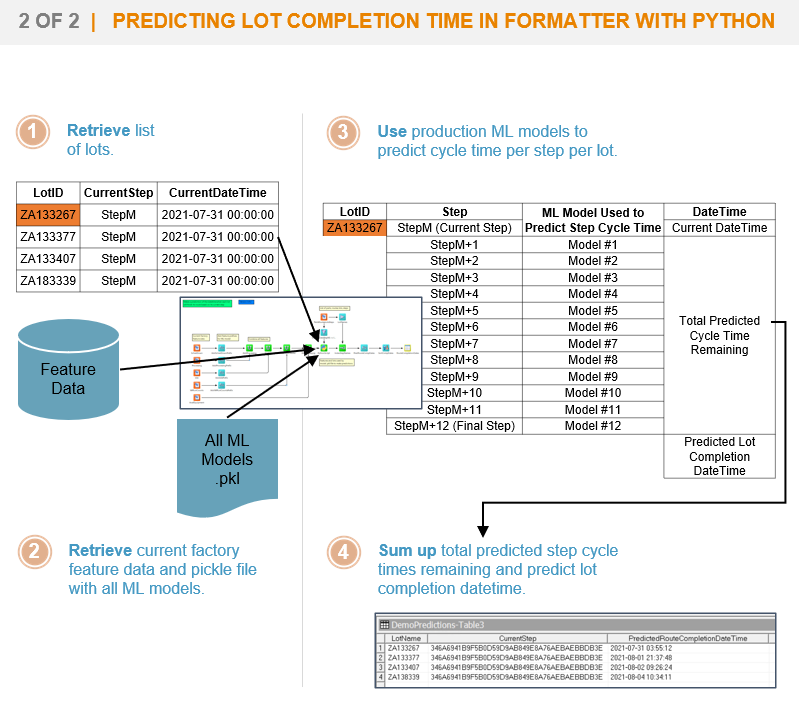

To illustrate the use of the AI/ML platform, consider the challenge of predicting lot cycle time. Lot cycle time prediction means predicting the completion time of each lot at a step. With accurate lot completion time predictions, fab managers can check whether their daily fab-out plan meets the schedule, so the prediction plays an important role in on-time delivery.

Figure 4 shows how to build an ML model for lot cycle time prediction (steps 1–5). For a lot at a given step, if 12 steps remain until fab out, then 12 models are generated, one per step pair, and each model is trained. The trained models are then aggregated into model files and deployed to production.
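The one-model-per-step-pair structure can be sketched as follows, using three remaining steps instead of 12 for brevity. The sketch assumes that a step pair is (current step, later step) and that each model predicts the elapsed time between them. Each "model" here is just a historical mean, a baseline swapped in for a real ML algorithm, and the step names and sample values are hypothetical.

```python
from statistics import mean

# Hypothetical training data: hours from the current step to the completion
# of each later step, one sample per past lot.
history = {
    ("S10", "S11"): [4.0, 5.0, 6.0],
    ("S10", "S12"): [9.0, 11.0, 10.0],
    ("S10", "S13"): [15.0, 17.0, 16.0],  # S13 is fab out in this example
}

def train_step_pair_models(history):
    """Train one model per step pair; each model is a historical mean."""
    return {pair: mean(samples) for pair, samples in history.items()}

def predict_completion_times(models, current_step, now_hours):
    """Predicted completion time of every remaining step for one lot."""
    return {
        target: now_hours + elapsed
        for (source, target), elapsed in models.items()
        if source == current_step
    }

# The dictionary of trained models plays the role of the aggregated bundle.
models = train_step_pair_models(history)
predictions = predict_completion_times(models, "S10", now_hours=100.0)
fab_out_time = predictions["S13"]
```

Keeping one model per pair means a prediction for any remaining step is a single lookup, at the cost of training and storing as many models as there are remaining steps.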

Conclusion

Are you mindful of gaps in your factory data? How well do you manage predictions for lot fab-out events, lot step arrivals, and tool down events? The productivity AI/ML platform is a set of tools for solving problems associated with such events. It powers automated processes and makes manufacturing equipment and processes more efficient and profitable. These tools support the general AI/ML model development and deployment lifecycle through efficient feature calculation and management. The platform also lets manufacturers deploy an ML model easily within their current scheduling and dispatching rule development environment. After deploying a model to production, manufacturers can monitor its performance and retrain it in response to that performance and to significant fluctuations in the fab.