Anatomy of field returns

What eludes the supply chain

The constituents of the current supply chain – fab, packaging, and electrical test – can be evaluated as successive detection gates, each offering finer granularity of detection but at a higher cost than the one before it.

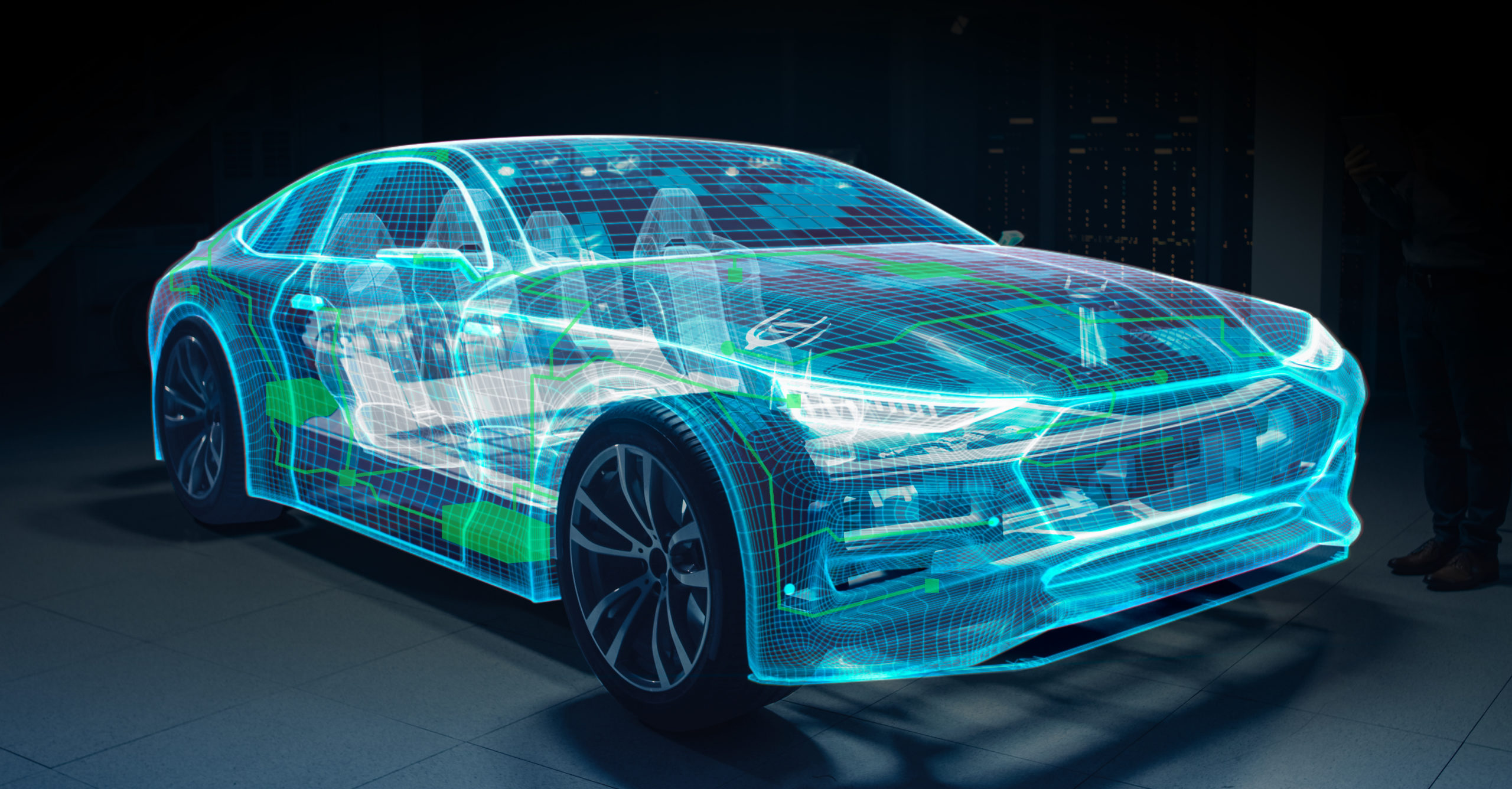

From a wafer fab perspective, automotive components can be broken out into the following buckets: safety/advanced driver assistance systems (ADAS), propulsion, and infotainment. First, the safety/ADAS bucket includes sensors (microelectromechanical systems [MEMS], optical, temperature) that are made on larger nodes, or radio-frequency (RF) components made in specialty fabs (GaAs, GaN, SiGe, and so forth) with a lower-than-average level of automation and detectability. Second, propulsion is an increasingly large group with the advent of electric vehicles (EVs) and can be anywhere from 180 nm to 32 nm. This bucket includes power components and engine control units (ECUs). These items are effectively mission-critical components and require a higher level of reliability. Finally, infotainment does not warrant the same level of reliability and has some flexibility in terms of sampling the latest and greatest fab nodes.

Given the wide range of fab nodes that are sampled in the automotive supply chain, we are subject to a variety of available quality levels. Reducing cost per component to maximize profit also means reducing the quality level to the bare acceptable minimum. Every detection step is a non-value-added step, and hence the cost is passed further downstream. This means that even with the best fabs in the business, PPM-level detection is neither offered nor discussed for parts that are in volume production. This situation deteriorates further as older nodes or specialty technologies are used.

The best chance for detecting defects on a part is with electrical testing, which entails wafer probe or final test on automated test equipment (ATE) in packaged form. Conceptually, if the part has been characterized thoroughly and built on a known technology with a low defect rate, then any remaining PPM-level failures can be captured at the electrical test step. Characterization depends on three things: 1) the design failure mode and effects analysis (DFMEA) being able to simulate all failure modes; 2) product engineers being able to test the parts to cover all customer mission profiles; and 3) data fidelity being maintained throughout as the software content of the parts increases.

Most parts do not have wafer-level traceability, which further degrades the ability to tie failures back to fab processing when warranted. In the face of ultra-aggressive customer timelines and cost pressures, we again see the acceptable quality level of this stage reduced to the bare minimum, so the outgoing product does not receive the full benefit of detection at electrical test. As a result, most customer failures are test-related issues and the costs of non-quality increase, which in turn motivates a move towards a holistic approach as a matter of progress and safety.

Framing the issue

If we are to consider a strategy to move the needle closer to a zero-defect concept, then several things must change from the way facilities operate today. Most of these legacy facilities are semi-automated or manual. This means that they fundamentally have a multitude of point solutions and disciplines to govern their quality standards. Simply put, they neither capture all the data needed to govern their processes effectively, nor analyze this data in a manner that allows for speedy and effective feedback. Based on field returns and internal failures, we have seen how far this strategy can take us. It is unlikely that we will be able to breach the 1PPM barrier consistently without rethinking the process entirely.

To summarize the problem, we have an inability to scale our detection, coupled with an inability to assure our test coverage and compounded by an inability to assign root cause with total certainty in too many of our cases. All these problems result from a patchwork approach to quality and a siloed perspective on quality data management. A holistic approach would streamline information sharing and facilitate first-time-right decision-making (Figure 3). So why haven’t systems evolved to be holistic?

Adopting the needed changes

Cost of implementation and training

The rate at which semiconductor fabs accommodate new automation solutions poses a barrier to quickly changing the quality capabilities of the fab. Most automation systems today are still fundamentally built around the same paradigm seen over the last 20 years: charts driven by Western Electric rules and out-of-control action plans (OCAPs) that are based around the errant chart. By the time the industry accessed streamlined automation solutions, legacy manufacturing facilities had long since amortized their building costs and depreciation regimes, running the business with just the operational essentials. This situation left minimal resources for new development because the revenue capacity of these facilities does not easily support the investment required to resolve any single problem.
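For readers less familiar with the chart-driven paradigm described above, the following is a minimal sketch of the four classic Western Electric zone rules applied to one control chart. The function name, the input layout, and the assumption that the center line and sigma are already known from a baseline period are illustrative choices, not a description of any particular fab system.

```python
def western_electric_violations(samples, center, sigma):
    """Return (index, rule) pairs for points that complete a rule violation.

    Assumes `center` and `sigma` were estimated beforehand from an
    in-control baseline; this sketch does not estimate them.
    """
    z = [(x - center) / sigma for x in samples]  # distance from center in sigmas
    hits = []
    for i in range(len(z)):
        # Rule 1: a single point beyond 3 sigma.
        if abs(z[i]) > 3:
            hits.append((i, 1))
        # Rule 2: 2 of 3 consecutive points beyond 2 sigma, same side.
        if i >= 2:
            w = z[i - 2:i + 1]
            if sum(v > 2 for v in w) >= 2 or sum(v < -2 for v in w) >= 2:
                hits.append((i, 2))
        # Rule 3: 4 of 5 consecutive points beyond 1 sigma, same side.
        if i >= 4:
            w = z[i - 4:i + 1]
            if sum(v > 1 for v in w) >= 4 or sum(v < -1 for v in w) >= 4:
                hits.append((i, 3))
        # Rule 4: 8 consecutive points on the same side of the center line.
        if i >= 7:
            w = z[i - 7:i + 1]
            if all(v > 0 for v in w) or all(v < 0 for v in w):
                hits.append((i, 4))
    return hits
```

Each rule looks at one chart in isolation, which is the limitation the rest of this section argues against: nothing in the check relates a signal at one process step to signals at another.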

Holistic automation solutions address systemic problems rather than single gaps. Half a million dollars can easily represent half a percent in profits depending on the facility, which is a significant erosion to margins. Furthermore, the return on investment is either too small to justify the risk or takes too long to achieve. Point solutions can easily range from $150K to $750K, which is a difficult barrier to breach. Understanding the requirements and the intricacies of the domain, along with the information technology (IT) infrastructure required to support it, takes a substantial investment. There is a significant cost to define and test disruptive systems that can replace the multitude of current automation practices. Unless a solution can provide a systemic resolution to quality – meaning resolve several high-value targets – these facilities will not be able to invest. These paradigms need to straddle the entire supply chain.

Most personnel in these facilities are not focused on developing new automation solutions, but rather on manufacturing reliable parts cost-effectively. So, accessing the technology to build a holistic and streamlined quality system is not a realistic expectation for these facilities. It would require a change in both the financial and the manufacturing mindset. A strong holistic quality program requires both automation and learning systems for those responsible for operating it. While numerous opportunities exist to automate tasks and decisions that historically were manual, the expectation will remain high on understanding the meaning of signals and quality metrics.

Moving to zero defects

There is certainly a hierarchy of automation needs in legacy facilities. Automated data acquisition is a good starting point, followed by a centralized alarm management system to connect signals and prevent material from moving into process tools that should not run product. A significant need also exists to reduce human error as part of the processing. Recipe management systems play a key role, as does centralized configuration management. After the automation layer is in place, the ability to expand the capabilities increases. For instance, once the statistical process control (SPC) practices of the fab access the raw data of a wafer measurement, the engineering community can make use of it to isolate within-wafer variation problems. Qualifying new products is time consuming, and preparing for production can be slow and prone to missing opportunities to define needed tests. The better the variation sources of a facility are understood, the greater the opportunity to qualify new products quickly. This knowledge will directly impact the gap in test coverage that allows 30% of field failures to persist.

Moving these traditional good practices to a holistic approach requires some integrated infrastructure. The first step is to define what holistic means in this context. Holistic is the ability to understand the interdependent behavior of the process steps. The control plan illustrates the known measurements associated with any product. The automation system will need to provide users with the ability to define interdependency relations of different process steps, as outlined in the control plan and process failure modes and effects analysis (FMEA). At runtime, the system will consume the data coming from multiple process steps, as defined in the control plan, and outline which data does not fit the population that we expect for the specific processes. This will need to be done using real-time data tools because a single decision will require users to assess 15 parameters with 12 to 20 sites for each. Each site will need to have a statistic and be broken down to divulge within-wafer variance profiles, wafer-to-wafer variation within the same process step, and finally, variation inherited from upstream steps. This approach will reduce the variation arriving at final test and therefore, reduce the chance of failing parts leaving the facility. More important is the change that this approach will impose on final test validity. A more stringent variation verification will become more effective to capture a greater degree of nonconformance.
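The breakdown into within-wafer and wafer-to-wafer variation can be sketched for one parameter at one process step as follows. The function name and the dictionary layout (wafer id mapped to a list of site readings) are assumptions made for illustration; variation inherited from upstream steps is not covered, and the variance of wafer means is a simple estimate rather than a full variance-component analysis.

```python
from statistics import mean, variance


def decompose_variation(site_readings):
    """Split one parameter's spread into within-wafer and wafer-to-wafer parts.

    site_readings: dict mapping a wafer id to the list of site measurements
    taken on that wafer (hypothetical layout; at least two sites per wafer
    and at least two wafers are required).
    """
    wafer_means = {w: mean(sites) for w, sites in site_readings.items()}
    # Within-wafer: average of the per-wafer sample variances across sites.
    within = mean([variance(sites) for sites in site_readings.values()])
    # Wafer-to-wafer: sample variance of the wafer means.
    between = variance(list(wafer_means.values()))
    return {
        "within_wafer": within,
        "wafer_to_wafer": between,
        "wafer_means": wafer_means,
    }


# Illustrative readings only, not measured data.
example = decompose_variation({"W1": [10.0, 12.0], "W2": [20.0, 22.0]})
```

In the example the sites on each wafer agree closely while the two wafers differ widely, so the wafer-to-wafer term dominates. Repeating the calculation for 15 parameters with 12 to 20 sites each is what makes manual assessment impractical.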

Another key difference is that holistic systems are not only error driven, but also sensitive to variation within the specification limits. Data shows that this is happening in facilities that have yields ranging from 88% to 92%. The frontend fabs combined with the packaging operations account for an approximately 0.76PPM failure rate. Devices within the specification limits will ultimately be functional, but not necessarily the same from a performance or longevity perspective. Process problems such as whiskers and bridging will elude many of these tests, causing shorts in the field. So, the automation system provides users the ability to define qualitative stack-up of step attributes, which is a measure of acceptable variation for a given problem.
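One way to make a check sensitive to variation inside the specification limits is a robust outlier screen, similar in spirit to part average testing. That technique is not named in the text and is swapped in here purely as an illustration; the function name, the multiplier `k`, and the sample values are all assumptions.

```python
from statistics import median, quantiles


def in_spec_outliers(values, lsl, usl, k=6.0):
    """Return indices of readings that pass the spec limits (lsl, usl)
    but sit far from the bulk of the population.

    The spread is estimated from the interquartile range so that the
    outliers themselves do not inflate it. The multiplier k is an
    assumed setting, not a value taken from the text.
    """
    q1, _, q3 = quantiles(values, n=4)
    robust_sigma = (q3 - q1) / 1.35  # IQR of a normal population is about 1.35 sigma
    center = median(values)
    lo, hi = center - k * robust_sigma, center + k * robust_sigma
    return [
        i for i, v in enumerate(values)
        if lsl <= v <= usl and not (lo <= v <= hi)
    ]


# Illustrative readings only: the last device is in spec but unlike its peers.
readings = [1.00, 1.01, 0.99, 1.02, 0.98, 1.00, 1.01, 0.99, 1.40]
suspects = in_spec_outliers(readings, lsl=0.5, usl=1.5)
```

A device flagged this way would pass a conventional limit test, which is the situation the paragraph above describes: functional at test, but not necessarily equivalent in performance or longevity.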

It is true that other factors play a role in the overall failure rate, including electrostatic damage and other forms of mishandling that can occur in several places throughout the supply chain. This highlights the need to have rapid genealogy of quality attributes that include design. Design here refers to parts that have been made for a long time but are now used in a new way for which their reliability is not suited. This constitutes an approximately 0.15PPM contribution to failures in the field. The cases that are “unknown” represent an approximately 0.21PPM contribution to the failures and are a major liability to the vendor. In the case where no cause can be assigned, the small vendors will be held accountable for the failure and will have the cost deducted from their agreement with the big car manufacturers. If this ability could be automated to straddle the front end and back end and ultimately include the surface mount technology (SMT) line, then rapid identification would become possible for any given device and cause. The speed of resolution in this case will impact cost of liability and, more importantly, safety. Unless the design of new automation systems adopts these guiding principles, it will be difficult to expect any supply chain to converge effectively towards zero defects (Figure 4).
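The rapid genealogy described above can be pictured as a chain of parent links that is walked upstream from a returned device. The sketch below assumes a single parent per record and uses invented identifiers; a real system would have to merge records from the front end, the back end, and the SMT line, which is not shown.

```python
def trace_genealogy(unit_id, parent_of):
    """Walk upstream from a unit to the root of its recorded history.

    parent_of: dict mapping each record id to the id of the record it was
    built from (hypothetical structure, one parent per record).
    """
    chain = [unit_id]
    seen = {unit_id}
    while chain[-1] in parent_of:
        parent = parent_of[chain[-1]]
        if parent in seen:
            # Guard against corrupted records that loop back on themselves.
            raise ValueError(f"cycle in genealogy at {parent!r}")
        chain.append(parent)
        seen.add(parent)
    return chain


# Invented identifiers, for illustration only.
parent_of = {
    "device-000123": "assembly-lot-A17",
    "assembly-lot-A17": "wafer-F301-08",
    "wafer-F301-08": "fab-lot-F301",
    "fab-lot-F301": "design-rev-C",
}
history = trace_genealogy("device-000123", parent_of)
```

When a link is missing, the walk simply stops early, and a chain that ends before reaching the wafer or fab lot is the kind of gap that leaves a failure in the “unknown” category.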

Original Equipment Suppliers Association

Automotive defect recall report 2019